Learn Machine | Machine Learning | Types of Machine Learning Algorithms | What is Machine Learning? | Google Machine Learning | AI Vs Machine Learning | Machine Learning Interview Questions and Answers | Machine Learning Certification | Python Machine Learning

Machine learning is a field of computer science concerned with developing algorithms and models that let computers learn from data and make predictions or decisions based on what they have learned. It is a subset of artificial intelligence.

Types of Machine Learning Algorithms

There are several types of machine learning algorithms, including supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

Supervised learning

In supervised learning, the algorithm is trained on labeled data, meaning that the data is already classified or labeled with the correct answer. The algorithm then learns from this labeled data to make predictions on new, unlabeled data.

Unsupervised learning

In unsupervised learning, the algorithm is trained on unlabeled data, and it must find patterns or relationships within the data on its own.

Semi-supervised learning

Semi-supervised learning is a combination of supervised and unsupervised learning, where some of the data is labeled and some is not.

Reinforcement learning

In reinforcement learning, the algorithm learns by interacting with an environment and receiving feedback in the form of rewards or penalties.
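The reward-driven loop described for reinforcement learning can be illustrated with a very small sketch. It assumes a hypothetical "multi-armed bandit" environment with three actions whose reward probabilities (0.2, 0.5, 0.8) are invented for illustration, and it uses an epsilon-greedy strategy, which is only one of many possible approaches.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit (a one-state environment)."""
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    counts = [0] * n_arms      # how often each action has been tried
    values = [0.0] * n_arms    # running estimate of each action's average reward

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = int(rng.random() * n_arms)
        else:
            # Exploit: take the action with the best estimate so far.
            action = max(range(n_arms), key=lambda a: values[a])

        # The environment responds with a reward of 1 or 0 (the feedback).
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0

        # Incrementally update the average reward estimate for this action.
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]

    return values, counts

# Hypothetical reward probabilities for three actions.
values, counts = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print("estimated rewards:", [round(v, 2) for v in values])
print("most rewarding action:", best_action)
```

The agent is never told which action is best; it only sees rewards, and its estimates improve as it gathers more feedback.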

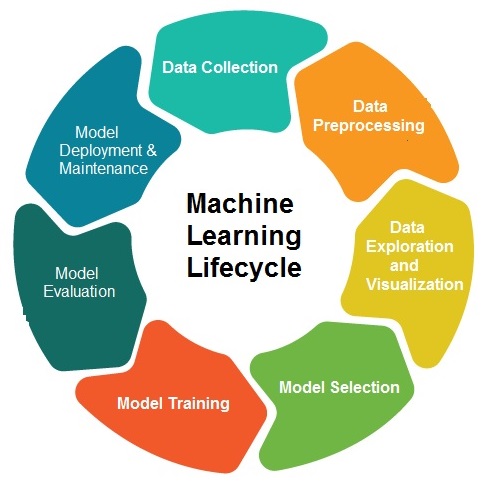

AI / Machine Learning lifecycle

Data Collection

Data Preprocessing

Data Exploration and Visualization

Model Selection

Model Training

Model Evaluation

Model Deployment

Model Maintenance

Machine Learning Applications and Examples

Machine learning has a wide range of applications across various fields, including:

Image and video recognition:

Machine learning algorithms are used in image and video recognition systems to identify and categorize objects in images and videos. For example, facial recognition systems use machine learning algorithms to identify individuals from images or videos.

Natural language processing:

Machine learning algorithms are used in natural language processing systems to understand and generate human language. Examples of this include chatbots, virtual assistants, and sentiment analysis.

Fraud detection:

Machine learning algorithms are used in fraud detection systems to identify fraudulent transactions or activities. For example, credit card companies use machine learning to detect fraudulent transactions based on the user's transaction history.

Recommendation systems:

Machine learning algorithms are used in recommendation systems to provide personalized recommendations to users based on their preferences and behavior. Examples of this include movie or music recommendation systems.

Autonomous vehicles:

Machine learning algorithms are used in autonomous vehicles to enable them to perceive and navigate their environment. For example, self-driving cars use machine learning algorithms to detect and classify objects in their surroundings.

Healthcare:

Machine learning algorithms are used in healthcare to predict patient outcomes and assist in diagnoses. For example, machine learning can be used to predict the likelihood of a patient developing a certain disease based on their medical history and genetic information.

Financial analysis:

Machine learning algorithms are used in financial analysis to forecast stock prices and detect fraudulent behavior. For example, models can be trained on historical data and current events to forecast stock price movements.

Hardware for AI

Artificial Intelligence (AI) requires specialized hardware that can process large amounts of data quickly and efficiently. Some of the hardware commonly used for AI includes:

Central Processing Units (CPUs):

CPUs are the primary processing units in computers and are used for general-purpose computing. They are still widely used in AI systems, especially for data preprocessing, inference, and training smaller models, as they offer good flexibility and are available on virtually every machine.

Graphics Processing Units (GPUs):

GPUs are specialized processors designed to handle complex graphical calculations, but they are also well-suited to handling the mathematical computations required by AI systems. They are commonly used in deep learning and neural network training, as they offer high performance and efficiency.

Field-Programmable Gate Arrays (FPGAs):

FPGAs are customizable integrated circuits that can be programmed to perform specific tasks. They are commonly used in AI systems for real-time data processing and can be configured to handle specific types of computations.

Application-Specific Integrated Circuits (ASICs):

ASICs are integrated circuits designed for specific applications, and they are increasingly being used in AI systems. They are highly specialized and can be designed to perform specific AI computations efficiently.

Tensor Processing Units (TPUs):

TPUs are a type of ASIC designed specifically for deep learning and neural network processing. They are highly optimized for matrix operations, which are a key component of these algorithms, and are capable of processing large amounts of data quickly.

Popular AI Frameworks and Platforms

A wide range of machine learning frameworks and cloud platforms is available today, each with its own features and capabilities. Some of the most popular include:

TensorFlow:

TensorFlow is an open-source machine learning platform developed by Google. It is widely used for deep learning and neural network training and is capable of running on a wide range of hardware, including CPUs, GPUs, and TPUs.

PyTorch:

PyTorch is an open-source machine learning library developed by Facebook (now Meta). It is known for its ease of use and flexibility and is commonly used for natural language processing and computer vision applications.

Keras:

Keras is a high-level neural network library. Earlier versions could run on top of TensorFlow, CNTK, or Theano; Keras 3 supports TensorFlow, JAX, and PyTorch as backends. It is designed to be user-friendly and is commonly used for deep learning applications.

Amazon SageMaker:

Amazon SageMaker is a cloud-based machine learning platform that provides a range of tools and services for building, training, and deploying machine learning models. It is designed to be scalable and easy to use, making it a popular choice for businesses and developers.

Microsoft Azure Machine Learning:

Azure Machine Learning is a cloud-based machine learning platform developed by Microsoft. It provides a range of tools and services for building, training, and deploying machine learning models and is known for its ease of use and scalability.

IBM Watson:

IBM Watson is a suite of AI tools and services developed by IBM. It includes a range of tools for natural language processing, computer vision, and other AI applications and is designed to be easy to use and scalable.

Configuration Techniques for AI

Configuration techniques for AI involve selecting the appropriate algorithms, model architectures, and hyperparameters to achieve optimal performance for a given task. Some of the common techniques for configuring AI systems include:

Algorithm selection:

The choice of algorithm can have a significant impact on the performance of an AI system. The most appropriate algorithm will depend on the nature of the task, the type and amount of data available, and the computational resources available.

Model architecture:

The architecture of the model can have a significant impact on its performance. For example, a convolutional neural network (CNN) is commonly used for image recognition tasks, while a recurrent neural network (RNN) is often used for natural language processing tasks.

Hyperparameter tuning:

Hyperparameters are parameters that are set prior to training the model and can have a significant impact on its performance. Examples of hyperparameters include learning rate, batch size, and regularization. Hyperparameter tuning involves selecting the optimal values for these parameters to achieve the best performance.

Transfer learning:

Transfer learning involves using a pre-trained model as a starting point for a new task. This can be particularly effective when there is limited data available for the new task, as the pre-trained model can provide a starting point for the new model to build upon.

Ensemble methods:

Ensemble methods involve combining multiple models to improve overall performance. This can be particularly effective when different models have different strengths and weaknesses, as the ensemble can leverage the strengths of each model.

Feature engineering:

Feature engineering involves selecting or creating features from the raw data that are most relevant to the task at hand. This can improve the performance of the model by providing it with more relevant information.
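To make the hyperparameter tuning step above more concrete, here is a minimal sketch of a grid search. It assumes a one-feature ridge regression (y ≈ w·x with an L2 penalty) whose only hyperparameter is the regularization strength `lam`; the toy data, the candidate grid, and the function names are all hypothetical and chosen only for illustration.

```python
def fit_ridge_slope(xs, ys, lam):
    """Closed-form slope for y ~ w * x when minimizing squared error + lam * w**2."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(xs, ys, w):
    """Mean squared error of the predictions w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical toy data: roughly y = 2x, with noise in the training set.
train_x, train_y = [1.0, 2.0, 3.0, 4.0], [2.3, 3.8, 6.4, 7.9]
val_x, val_y = [1.5, 2.5, 3.5], [3.0, 5.0, 7.0]

# Candidate values for the hyperparameter (the "grid").
grid = [0.0, 0.1, 1.0, 10.0]

# Train once per candidate and score each model on held-out validation data.
results = {}
for lam in grid:
    w = fit_ridge_slope(train_x, train_y, lam)
    results[lam] = mse(val_x, val_y, w)

best_lam = min(results, key=results.get)
print("validation error per value:", results)
print("selected regularization strength:", best_lam)
```

The key design point is that each candidate is scored on data the model was not trained on; scoring on the training set would always favor the least regularized model.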

Machine Learning Course

Many online courses can help you get started. Here are a few popular options:

Machine Learning by Andrew Ng on Coursera:

This course is widely regarded as one of the best introductions to machine learning. It covers a wide range of topics, including linear regression, logistic regression, neural networks, and support vector machines, and includes hands-on programming assignments. The course is self-paced and can be taken for free or with a certificate for a fee.

Deep Learning Specialization by Andrew Ng on Coursera:

Deep Learning Specialization by Andrew Ng on Coursera is a series of courses that covers the fundamentals of deep learning, including neural networks, convolutional neural networks, and recurrent neural networks. The courses include programming assignments and a final project. The series can be taken for free or with a certificate for a fee.

Applied Data Science with Python Specialization on Coursera:

Applied Data Science with Python Specialization on Coursera is a series of courses that covers a wide range of topics related to data science, including machine learning. The courses include hands-on programming assignments and a final project. The series can be taken for free or with a certificate for a fee.

Machine Learning Crash Course by Google:

Machine Learning Crash Course by Google is a free, self-paced course that covers the basics of machine learning, including supervised and unsupervised learning, feature engineering, and model evaluation. The course includes interactive exercises and programming assignments.

Introduction to Machine Learning with Python by DataCamp:

Introduction to Machine Learning with Python by DataCamp is a self-paced course that covers the basics of machine learning using Python. It includes hands-on programming exercises and covers topics such as linear regression, logistic regression, and decision trees.

Minimum Education qualification for ML course

There is no specific minimum education qualification required for taking a course in machine learning. However, a strong foundation in mathematics and programming can be helpful. Here are some recommended background skills and knowledge:

Mathematics:

A good understanding of linear algebra, calculus, probability theory, and statistics is important for understanding the concepts and algorithms used in machine learning.

Programming:

Knowledge of a programming language such as Python, R, or MATLAB is essential for implementing machine learning algorithms and working with datasets.

Data Structures and Algorithms:

Knowledge of data structures and algorithms is important for optimizing and improving machine learning models.

Familiarity with Machine Learning Concepts:

A basic understanding of the key concepts and terminologies in machine learning, such as supervised and unsupervised learning, overfitting, underfitting, and regularization, is important for learning the advanced topics in machine learning.

Critical Thinking and Problem-Solving Skills:

Machine learning involves solving complex problems, and the ability to think critically and creatively is important for developing effective machine learning models.

Job prospects for Machine Learning

The job prospects for machine learning (ML) are very promising, as ML is becoming increasingly important in many industries. Here are some examples of job roles that are related to machine learning:

Machine Learning Engineer:

A machine learning engineer is responsible for designing, building, and deploying machine learning models and systems. They work with large datasets and use algorithms and statistical models to train machines to perform specific tasks.

Data Scientist:

Data scientists analyze and interpret complex data using machine learning algorithms and other statistical tools. They work with large datasets and use machine learning techniques to uncover insights and make predictions.

Artificial Intelligence Research Scientist:

An AI research scientist develops new machine learning models and algorithms to solve complex problems. They work on cutting-edge research and development projects in the field of AI and machine learning.

Business Intelligence Developer:

Business intelligence developers create data models and dashboards that help businesses make data-driven decisions. They use machine learning algorithms and other analytical tools to analyze large datasets and uncover insights that can improve business performance.

Robotics Engineer:

Robotics engineers design and develop intelligent machines and robots that can perform tasks autonomously. They use machine learning algorithms and other AI techniques to train robots to learn from their environment and make decisions.

Computer Vision Engineer:

A computer vision engineer is responsible for developing computer vision algorithms and systems that enable machines to "see" and interpret visual data. They use machine learning algorithms to train machines to recognize objects, patterns, and other visual cues.

Machine Learning Engineer Salary

The salary of a machine learning engineer varies depending on various factors such as experience, location, industry, and company size. According to Glassdoor, the average base salary of a machine learning engineer in the United States is around $115,000 per year. However, this can range from around $80,000 per year for entry-level positions to over $150,000 per year for senior positions in larger companies.

In addition to the base salary, machine learning engineers may also receive bonuses, equity, and other benefits. The salary can also vary based on the industry, with some of the highest-paying industries for machine learning engineers including finance, healthcare, and technology.

It is important to note that the salary of a machine learning engineer may also vary depending on the location, with cities such as San Francisco, Seattle, and New York typically offering higher salaries due to the higher cost of living.

Business ideas for ML

There are many business ideas that can be built around machine learning (ML) technologies. Here are some examples:

Personalized recommendations:

Use ML algorithms to analyze customer behavior and preferences, and provide personalized product or content recommendations. This can be applied to e-commerce websites, streaming services, and many other types of businesses.

Predictive maintenance:

Use ML algorithms to analyze data from machines and equipment, and predict when maintenance or repairs will be needed. This can help reduce downtime and maintenance costs, and improve overall efficiency.

Fraud detection:

Use ML algorithms to detect and prevent fraudulent activities, such as credit card fraud, insurance fraud, and identity theft. This can be applied to financial institutions, e-commerce websites, and many other businesses.

Sentiment analysis:

Use ML algorithms to analyze social media and other online sources, and determine customer sentiment towards a brand or product. This can help businesses understand their customers better and make more informed decisions.

Autonomous vehicles:

Develop autonomous vehicles that use ML algorithms to learn from their environment and make decisions. This can be applied to transportation and logistics industries, as well as other industries that rely on vehicles and transportation.

Healthcare:

Use ML algorithms to analyze medical data and identify patterns and trends that can help diagnose diseases and develop more effective treatments. This can be applied to healthcare providers, pharmaceutical companies, and many other related industries.

Natural language processing:

Develop chatbots and virtual assistants that use ML algorithms to understand and respond to customer queries and requests. This can be applied to customer service and support functions in many different industries.

ML Trending Countries

Machine learning is a rapidly growing field with many countries actively investing in its development and research. Here are some countries that are currently trending in machine learning:

United States:

The United States has been a leader in machine learning research and development for many years. It is home to some of the world's top research institutions, such as MIT, Stanford, and Carnegie Mellon University. The country is also home to many leading tech companies, such as Google, Amazon, and Microsoft, that are actively working on machine learning projects.

China:

China has been investing heavily in machine learning and artificial intelligence in recent years, with the government setting ambitious goals for the development of these technologies. The country is home to many leading tech companies, such as Baidu, Alibaba, and Tencent, that are actively working on machine learning projects.

Canada:

Canada has a thriving machine learning ecosystem, with many top research institutions and companies in the field. The country is home to the Vector Institute for Artificial Intelligence, a leading research center dedicated to machine learning, as well as companies such as Element AI (acquired by ServiceNow in 2020), and it hosts research offices of labs such as DeepMind.

United Kingdom:

The United Kingdom has a strong history of research and development in machine learning, with many top research institutions such as the University of Cambridge, Imperial College London, and the University of Oxford. The country is also home to many leading companies in the field, such as DeepMind, an AI research lab owned by Google.

Germany:

Germany has a growing machine learning ecosystem, with many universities and research institutions actively working on machine learning projects. The country is also home to many leading tech companies, such as Siemens, Bosch, and SAP, that are investing in machine learning and AI.

Threats due to ML

While machine learning (ML) has the potential to revolutionize many fields, there are also some potential threats associated with this technology. Here are a few examples:

Bias:

Machine learning models are only as good as the data they are trained on. If the training data is biased in some way, the model may also be biased, leading to unfair or discriminatory decisions. For example, a facial recognition system trained on a dataset that is not representative of the entire population may perform poorly for certain demographic groups.

Job displacement:

As machine learning algorithms become more sophisticated, they may be able to automate tasks that were previously done by humans. This could lead to job displacement in certain industries, particularly those that involve routine or repetitive tasks.

Security threats:

Machine learning algorithms rely on vast amounts of data to learn and make decisions. If this data is compromised or manipulated, it could lead to security threats or attacks. For example, an attacker could manipulate data used to train a self-driving car's computer vision system, causing it to misinterpret road signs or obstacles.

Privacy concerns:

Machine learning algorithms can process large amounts of personal data, such as social media posts, search history, and online shopping behavior. This raises concerns about privacy, particularly if this data is used without individuals' knowledge or consent.

Lack of transparency:

Many machine learning algorithms are highly complex and difficult to interpret. This lack of transparency can make it difficult for users to understand how the algorithm is making decisions, and can also make it difficult to identify and correct errors or biases.

Use of ML in day-to-day life

Machine learning (ML) is already being used in a variety of ways in our daily lives, sometimes without us even realizing it. Here are some examples:

Personalized recommendations:

ML algorithms are used to power personalized recommendation systems on platforms such as Amazon, Netflix, and Spotify. These systems analyze users' past behavior and preferences to suggest new products, movies, TV shows, or songs that they might enjoy.

Fraud detection:

ML algorithms are used by banks and credit card companies to detect fraudulent transactions. These systems analyze patterns in users' spending behavior and can flag suspicious transactions for further review.

Voice assistants:

Voice assistants like Siri, Alexa, and Google Assistant use ML algorithms to understand and respond to users' requests. These systems rely on speech recognition to transcribe spoken words and on natural language processing (NLP) to interpret them, and they use machine learning to improve over time.

Image and speech recognition:

ML algorithms are used to power image and speech recognition systems, which are becoming increasingly accurate. These systems can be used for tasks such as identifying objects in images, transcribing speech to text, or translating between languages.

Spam filtering:

Email providers like Gmail and Outlook use ML algorithms to filter out spam messages. These systems analyze the content of incoming emails and can identify patterns that are commonly associated with spam.

Areas where ML should not be used

While machine learning (ML) has many potential applications, there are also some areas where it may not be appropriate or effective. Here are a few examples:

Safety-critical systems:

Machine learning algorithms may not be suitable for safety-critical systems such as those used in aviation, nuclear power plants, or medical devices. These systems require high levels of reliability and predictability, which may not be possible with current ML algorithms.

Legal decision-making:

While ML algorithms may be able to assist with legal research or document analysis, they may not be suitable for making legal decisions, such as those made by judges or juries. These decisions require a high degree of nuance and context, which may not be easily captured by a machine learning algorithm.

Moral or ethical decision-making:

ML algorithms may not be able to make moral or ethical decisions, as these decisions often require subjective value judgments. For example, a self-driving car may be able to make decisions based on its programmed rules, but it may not be able to decide whom to prioritize in a potential accident scenario.

Situations with limited data:

Many machine learning algorithms require large amounts of data to learn and make reliable predictions. In situations where data is limited or incomplete, ML algorithms may not be effective.

Human interactions:

While ML algorithms can be used for tasks such as customer service or chatbots, they may not be able to replicate the complexity and nuance of human interactions. For example, a customer service chatbot may not be able to provide the same level of empathy or emotional support as a human customer service representative.

Decision tree in Machine Learning

Decision trees are a popular machine learning algorithm used for classification and regression problems. They are a type of supervised learning algorithm that is used for both categorical and continuous input and output variables. Decision trees are easy to understand and interpret, making them a popular choice for many applications.

In a decision tree, the input data is split into a tree-like structure, where each node represents a decision based on one or more input variables. The tree is built recursively by splitting the data into smaller subsets based on the most informative input variable at each node. The goal is to create a tree that predicts the output variable with the highest accuracy.

The tree is constructed in a way that maximizes information gain at each level, which is calculated by measuring the decrease in impurity after a split. The impurity can be measured using different metrics such as Gini Index, Entropy, or Classification Error. The metric used depends on the type of problem and the desired outcome.
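The impurity measures and the information gain calculation described above can be sketched in a few lines. The function names and the tiny label lists below are hypothetical; the formulas are the standard definitions of Gini impurity and entropy.

```python
from collections import Counter
from math import log2

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def entropy(labels):
    """Entropy in bits: minus the sum of p * log2(p) over the classes."""
    n = len(labels)
    return -sum((count / n) * log2(count / n) for count in Counter(labels).values())

def information_gain(parent, children, impurity=entropy):
    """Decrease in impurity after splitting `parent` into `children`."""
    n = len(parent)
    weighted_child_impurity = sum(len(child) / n * impurity(child) for child in children)
    return impurity(parent) - weighted_child_impurity

# Hypothetical node with two classes, and two candidate splits.
parent = ["yes", "yes", "no", "no"]
perfect_split = [["yes", "yes"], ["no", "no"]]
useless_split = [["yes", "no"], ["yes", "no"]]

print("gini of parent:", gini(parent))
print("entropy of parent:", entropy(parent))
print("gain of perfect split:", information_gain(parent, perfect_split))
print("gain of useless split:", information_gain(parent, useless_split))
```

A tree-building algorithm would evaluate many candidate splits this way at each node and keep the one with the largest gain.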

Once the tree is constructed, new data points can be classified by following the path from the root to a leaf node. The leaf node represents the final decision about the output variable. Decision trees can also be pruned to avoid overfitting, which occurs when the tree is too complex and fits the training data too closely, leading to poor generalization to new data.

Decision trees have several advantages, including their interpretability, ease of use, and ability to handle both categorical and continuous data. However, they also have some limitations, such as a tendency to overfit and sensitivity to small changes in the input data.

Machine Learning Models

There are many different types of machine learning models, each with their own strengths and weaknesses. Here are some of the most common types of machine learning models:

Linear regression:

A type of regression analysis used for predicting continuous values. It involves fitting a linear equation to a set of input and output variables.

Logistic regression:

A type of regression analysis used for predicting binary outcomes. It involves fitting a logistic function to a set of input and output variables.

Decision trees:

A type of supervised learning algorithm used for classification and regression problems. It involves splitting the input data into a tree-like structure, where each node represents a decision based on one or more input variables.

Random forests:

An ensemble learning method that combines multiple decision trees to improve accuracy and reduce overfitting.

Support vector machines (SVMs):

A type of supervised learning algorithm used for classification and regression problems. For classification, it involves finding the hyperplane that separates the classes with the largest possible margin.

Neural networks:

A type of machine learning model inspired by the structure of the human brain. It involves layers of interconnected nodes, each performing a simple calculation, to learn complex patterns in the input data.

K-nearest neighbors (KNN):

A type of lazy learning algorithm used for classification and regression problems. It involves finding the k nearest neighbors in the input data to predict the output variable.

Naive Bayes:

A type of probabilistic model used for classification problems. It involves calculating the probability of each class given the input variables and selecting the class with the highest probability.
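As one concrete instance from the list above, k-nearest neighbors is simple enough to sketch from scratch. The version below assumes Euclidean distance and majority voting; the training points, labels, and the function name `knn_predict` are hypothetical.

```python
from collections import Counter
from math import dist

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort the training examples by Euclidean distance to the query point.
    neighbors = sorted(
        zip(train_points, train_labels),
        key=lambda pair: dist(pair[0], query),
    )
    nearest_labels = [label for _, label in neighbors[:k]]
    # The most frequent label among the k neighbors is the prediction.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical two-feature training data with two classes.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
labels = ["small", "small", "small", "large", "large", "large"]

print(knn_predict(points, labels, (2, 2)))
print(knn_predict(points, labels, (7, 9)))
```

The algorithm is called "lazy" because there is no training step: all the work happens at prediction time, when distances to the stored examples are computed.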

How to choose ML models based upon application

Choosing the right machine learning model for a particular application depends on several factors, including the nature of the problem, the type and amount of data available, and the desired outcome. Here are some general guidelines for selecting a model based on the application:

Regression problems:

For problems involving continuous output variables, linear regression, polynomial regression, or regression trees can be used depending on the nature of the data and the complexity of the problem.

Classification problems:

For problems involving discrete output variables, decision trees, random forests, SVMs, or neural networks can be used depending on the nature of the data and the complexity of the problem.

Unsupervised learning problems:

For problems involving clustering or dimensionality reduction, K-means clustering, hierarchical clustering, principal component analysis (PCA), or autoencoders can be used depending on the nature of the data and the desired outcome.

Natural language processing problems:

For problems involving text data, naive Bayes, support vector machines, or recurrent neural networks can be used for classification and language modeling tasks.

Image or video analysis problems:

For problems involving image or video data, convolutional neural networks (CNNs) are widely used due to their ability to learn spatial features in the data.
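For the unsupervised case mentioned in the guidelines above, K-means clustering can be sketched as follows. This is a simplified version that assumes Euclidean distance and, for reproducibility, uses the first k points as the initial centroids (practical implementations usually pick them randomly or with a smarter scheme). The data and names are hypothetical.

```python
from math import dist

def k_means(points, k, iterations=10):
    """Cluster `points` into k groups; returns (centroids, clusters)."""
    # Assumption: the first k points serve as the initial centroids.
    centroids = [tuple(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                new_centroids.append(tuple(sum(c) / len(cluster) for c in zip(*cluster)))
            else:
                new_centroids.append(centroids[i])  # keep the centroid of an empty cluster
        if new_centroids == centroids:
            break  # assignments are stable, so the algorithm has converged
        centroids = new_centroids
    return centroids, clusters

# Hypothetical unlabeled data with two visible groups.
data = [(1.0, 1.0), (2.0, 1.0), (3.0, 1.0), (10.0, 1.0), (11.0, 1.0), (12.0, 1.0)]
centroids, clusters = k_means(data, k=2)
print("centroids:", centroids)
print("clusters:", clusters)
```

No labels are supplied anywhere; the grouping emerges only from the distances between points.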

AI Vs Machine Learning

Artificial intelligence (AI) and machine learning (ML) are related but distinct concepts. AI is a broad field of computer science that aims to create intelligent machines that can perform tasks that typically require human-level intelligence, such as natural language processing, problem solving, and decision making. Machine learning is a subset of AI that involves training algorithms to make predictions or decisions based on input data.

AI, on the other hand, encompasses a much broader range of technologies, including machine learning, natural language processing, computer vision, robotics, and more. AI aims to create intelligent machines that can perform a wide range of tasks, not just those that involve data analysis and prediction.

Machine Learning Python | Python Machine Learning

Python is one of the most popular programming languages used in machine learning due to its simplicity, flexibility, and powerful libraries such as NumPy, Pandas, Matplotlib, and Scikit-learn. Here are some of the key steps involved in using Python for machine learning:

Data preparation:

This involves loading and preprocessing data for use in machine learning models. This can be done using libraries such as NumPy and Pandas.

Model selection and training:

This involves selecting an appropriate machine learning model and training it on the prepared data. Scikit-learn is a popular library for machine learning in Python, and it provides a wide range of machine learning algorithms for classification, regression, clustering, and more.

Model evaluation:

Once a model has been trained, it is important to evaluate its performance using metrics such as accuracy, precision, recall, and F1 score. This can be done using libraries such as Scikit-learn and Matplotlib.

Model tuning:

It may be necessary to tune the hyperparameters of a machine learning model to optimize its performance. This can be done using techniques such as grid search and cross-validation, which are provided by Scikit-learn.

Deployment:

Once a model has been trained and tuned, it can be deployed in production environments to make predictions on new data. This can be done using libraries such as Flask, which provide a framework for building web applications that can integrate with machine learning models.
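The evaluation metrics named in the steps above (accuracy, precision, recall, and F1 score) can be computed by hand, which helps clarify what they measure. The sketch below uses only the standard library; the labels and predictions are hypothetical. In practice, scikit-learn offers equivalent functions in `sklearn.metrics` (`accuracy_score`, `precision_score`, `recall_score`, `f1_score`).

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives

    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical true labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision asks how many predicted positives were correct, recall asks how many actual positives were found, and F1 is their harmonic mean.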

Logistic Regression Machine Learning

Logistic regression is a supervised learning algorithm used for binary classification tasks, where the goal is to predict the probability that a given input belongs to one of two classes. It is commonly used in machine learning for tasks such as fraud detection, spam filtering, and medical diagnosis.

In logistic regression, the input data is represented by a set of features or predictors, and the output is a binary class label (e.g., 0 or 1). The model computes a weighted sum of the input features plus a bias term, and then applies a sigmoid function to that linear output to convert it into a probability value between 0 and 1.

The weights are learned using an optimization algorithm such as gradient descent to minimize a loss function, typically the log loss (cross-entropy), which penalizes predicted probabilities that are far from the true class label. Once the model has been trained, it can be used to make predictions on new input data by computing the probability that the input belongs to the positive class.
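A minimal from-scratch sketch may help tie these pieces together: a linear combination of the features, a sigmoid, and gradient descent on the log loss. The learning rate, number of epochs, toy data, and function names are assumptions made for illustration, not recommended settings.

```python
from math import exp

def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + exp(-z))

def predict_proba(features, weights, bias):
    """Probability that `features` belongs to the positive class."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_logistic_regression(xs, ys, learning_rate=0.1, epochs=1000):
    """Batch gradient descent on the average log loss."""
    weights = [0.0] * len(xs[0])
    bias = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(xs, ys):
            # (prediction - label) is the gradient of the log loss
            # with respect to the linear output.
            error = predict_proba(features, weights, bias) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        # Step against the average gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias

# Hypothetical one-feature data: negative inputs are class 0, positive are class 1.
xs = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]
ys = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic_regression(xs, ys)
print("P(class 1 | x = 3):", round(predict_proba([3.0], weights, bias), 3))
print("P(class 1 | x = -3):", round(predict_proba([-3.0], weights, bias), 3))
```

To turn a probability into a class label, a threshold (commonly 0.5) is applied to the output.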

Logistic regression is a simple and interpretable algorithm that is often used as a baseline model in machine learning tasks. However, it may not perform well on datasets with complex relationships between the features and the class label, and other algorithms such as decision trees and neural networks may be more appropriate.

Random Forest Algorithm in Machine Learning

Random forest is a popular machine learning algorithm that belongs to the family of ensemble learning methods. It combines the predictions of many decision trees, which typically makes it more robust and accurate than a single decision tree. Here's how it works:

Data preparation:

The input data is split into training and testing datasets, and the features are preprocessed and normalized as needed.

Random sampling:

A random subset of the training data is selected, with replacement, to train each decision tree. This process is called bootstrapping.

Decision tree training:

A decision tree is trained on the bootstrapped subset of the data, using a random subset of the features at each node.

Ensemble construction:

Multiple decision trees are trained in this way, using different bootstrapped subsets and feature subsets. This creates an ensemble of decision trees that work together to make a final prediction.

Prediction:

To make a prediction for a new input, every tree in the ensemble makes its own prediction. For classification, the most common prediction (the majority vote) is taken as the final output; for regression, the predictions of the trees are averaged.

The advantages of using a random forest algorithm are:

It can handle a large number of input features and can effectively capture complex relationships between them.

It is less prone to overfitting than a single decision tree, as the ensemble of trees helps to reduce variance and increase accuracy.

It can provide feature importance scores, which can help identify the most important features for a given task.

Random forest has many applications, including classification, regression, and feature selection tasks. It is widely used in areas such as finance, healthcare, and marketing.
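As a rough illustration of the steps and advantages described above, the sketch below trains scikit-learn's RandomForestClassifier on the bundled Iris dataset. The dataset, the number of trees, and the split size are arbitrary choices made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Iris is used purely as a convenient built-in example dataset
iris = load_iris()
X, y = iris.data, iris.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# 100 trees, each grown on a bootstrap sample, considering a random
# subset of sqrt(n_features) features at every split
forest = RandomForestClassifier(
    n_estimators=100, max_features="sqrt", bootstrap=True, random_state=0
)
forest.fit(X_train, y_train)

test_accuracy = forest.score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.2f}")

# Importance scores sum to 1; a larger score means the feature was more useful
for name, score in zip(iris.feature_names, forest.feature_importances_):
    print(f"{name}: {score:.3f}")
```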

Cross Validation Machine Learning

Cross-validation is a technique used in machine learning to estimate how well a model will perform on unseen data. It is particularly useful for detecting overfitting, which is when a model performs well on the training data but poorly on new, unseen data.

In cross-validation, the available data is divided into k subsets of approximately equal size. The model is then trained on k-1 of these subsets and tested on the remaining subset. This process is repeated k times, with each subset being used for testing exactly once. The results from each iteration are then averaged to give an overall performance estimate.

This procedure is known as k-fold cross-validation, and it is the most common form of cross-validation. The value of k is typically set to 5 or 10.
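The splitting procedure can be sketched in a few lines of plain Python. The helper names, the toy labels, and the "majority class" stand-in model below are hypothetical and exist only to show how each sample lands in the test fold exactly once. The sketch does not shuffle the data, which real code usually does first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

def cross_validate(X, y, k, fit, score):
    """Average the score over k folds; `fit` and `score` are caller-supplied."""
    scores = []
    for train, test in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train], [y[i] for i in train])
        scores.append(score(model, [X[i] for i in test], [y[i] for i in test]))
    return sum(scores) / k, scores

# Hypothetical stand-in "model": always predict the most common training label
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)

def accuracy(model, X_test, y_test):
    return sum(1 for label in y_test if label == model) / len(y_test)

X = [[i] for i in range(10)]
y = [0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
mean_score, fold_scores = cross_validate(X, y, k=5, fit=fit_majority, score=accuracy)
print(fold_scores)  # one accuracy value per fold
print(mean_score)   # their average is the overall estimate
```

With scikit-learn, the same idea is available through helpers such as cross_val_score and KFold.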

The advantages of using cross-validation include:

It provides a more reliable estimate of a model's performance on new, unseen data than using a single train-test split.

It helps to detect overfitting by evaluating the model's ability to generalize to data it was not trained on.

It can help to optimize model hyperparameters, such as regularization strength, by comparing the average cross-validated score of each candidate setting.

Overall, cross-validation is an important technique in machine learning for evaluating and optimizing models, and it should be used whenever possible to ensure the reliability and generalizability of the model.

Clustering in Machine Learning

Clustering is a type of unsupervised learning in machine learning, where the goal is to group similar data points together into clusters, based on their feature similarities. Clustering algorithms can help discover patterns and structures in data that may not be immediately apparent.

There are several types of clustering algorithms, including:

K-means clustering: In this algorithm, the data is partitioned into k clusters, with each cluster represented by its centroid. The algorithm iteratively assigns data points to the nearest centroid, and then updates the centroids to minimize the sum of the squared distances between each data point and its assigned centroid.

Hierarchical clustering: This algorithm creates a hierarchy of clusters, with each cluster being nested inside another. The algorithm can be agglomerative, where each data point starts as its own cluster and is then progressively merged into larger clusters, or divisive, where all data points start in a single cluster and are then recursively split into smaller clusters.

Density-based clustering: This algorithm clusters data points based on their density, with each cluster being defined as a region of high density surrounded by a region of low density. The most commonly used density-based clustering algorithm is DBSCAN (Density-Based Spatial Clustering of Applications with Noise).

Clustering has many applications, such as customer segmentation, anomaly detection, and image segmentation. It can also be used as a preprocessing step for supervised learning tasks, such as classification and regression.

The effectiveness of clustering algorithms depends on the choice of distance metric, the number of clusters, and the choice of algorithm. It is important to choose the right algorithm and parameters for the given task to ensure the best results.
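To make the k-means assignment and update steps concrete, here is a small NumPy sketch of the standard iteration (often called Lloyd's algorithm). The function name, the random initialisation, and the synthetic two-blob data are assumptions made for this example.

```python
import numpy as np

def k_means(X, k, n_iterations=100, seed=0):
    """Alternate assignment and centroid-update steps until centroids settle."""
    rng = np.random.default_rng(seed)
    # Initialise centroids with k distinct data points chosen at random
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iterations):
        # Assignment step: index of the nearest centroid for every point
        distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # converged: the centroids no longer move
        centroids = new_centroids
    return labels, centroids

# Synthetic data (made up): two well-separated blobs in 2-D
rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2)),
])
labels, centroids = k_means(X, k=2)
print(centroids)  # one row per cluster centre
```

The result depends on the initial centroids, which is why library implementations such as scikit-learn's KMeans run several initialisations and keep the best one.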

SVM in Machine Learning | Support Vector Machine in Machine Learning

Support Vector Machines (SVMs) are a type of supervised learning algorithm used for classification and regression analysis. In SVM, the goal is to find the hyperplane that best separates the data into different classes.

The main idea behind SVM is to find the hyperplane that maximizes the margin between the classes. The margin is defined as the distance between the hyperplane and the nearest data points from each class. The data points closest to the hyperplane are called support vectors, and they play an important role in the algorithm.

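When the classes are linearly separable, the SVM algorithm finds the hyperplane that maximizes the margin while still classifying every training point correctly (a hard margin). In practice, a soft margin is usually used, which tolerates some misclassified points in exchange for a wider margin. When the data is not linearly separable, SVM can still be used by mapping the data into a higher-dimensional space, where a separating hyperplane can be found. Kernel functions such as the radial basis function (RBF) kernel do this implicitly, without computing the mapping explicitly (the kernel trick).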
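The effect of moving to a higher-dimensional space can be seen in a small experiment with scikit-learn's SVC. The sketch below uses a synthetic dataset of two concentric rings; the dataset parameters and the value of C are illustrative assumptions.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings: no straight line can separate the classes
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# C is the regularization parameter: a smaller C tolerates more margin violations
linear_svm = SVC(kernel="linear", C=1.0).fit(X_train, y_train)
rbf_svm = SVC(kernel="rbf", C=1.0, gamma="scale").fit(X_train, y_train)

linear_accuracy = linear_svm.score(X_test, y_test)
rbf_accuracy = rbf_svm.score(X_test, y_test)
print(f"Linear kernel accuracy: {linear_accuracy:.2f}")
print(f"RBF kernel accuracy:    {rbf_accuracy:.2f}")

# Only the support vectors enter the decision function
print("Support vectors per class (RBF):", rbf_svm.n_support_)
```

On data like this, the linear kernel is expected to do little better than guessing, while the RBF kernel can separate the rings.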

SVM can be used for both classification and regression problems. In classification, SVM is used to predict which class a new data point belongs to. In regression, SVM is used to predict a continuous output variable.

SVM has several advantages over other classification algorithms, including:

- It works well in high-dimensional spaces and can handle a large number of features.

- It is effective in cases where the number of dimensions is greater than the number of samples.

- It is memory efficient because it uses only a subset of the training points in the decision function.

- It has a regularization parameter that can be adjusted to avoid overfitting.

However, SVM also has some limitations, such as:

- It can be slow to train on large datasets.

- It may be sensitive to the choice of kernel function.

- It can be difficult to interpret the results and explain the decision-making process.

Overall, SVM is a powerful and widely used algorithm in machine learning, especially for classification problems with high-dimensional data.

PCA in Machine Learning

Principal Component Analysis (PCA) is a technique used for dimensionality reduction in machine learning. The goal of PCA is to transform the original data into a new coordinate system, where the dimensions (called principal components) are uncorrelated and capture the maximum amount of variance in the data.

PCA works by finding the directions (or vectors) in the data that have the maximum variance. These directions correspond to the principal components. The first principal component is the direction that captures the maximum amount of variance in the data. The second principal component is the direction that captures the maximum amount of variance in the data, subject to being orthogonal to the first principal component, and so on.
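One common way to find these directions is to take the eigenvectors of the covariance matrix of the centered data. The NumPy sketch below follows that approach; the function name and the synthetic data are assumptions made for illustration, and library implementations typically use a singular value decomposition instead.

```python
import numpy as np

def pca(X, n_components):
    """Return projected data, component directions, and explained-variance ratios."""
    X_centered = X - X.mean(axis=0)  # PCA works on zero-mean features
    covariance = np.cov(X_centered, rowvar=False)
    # eigh is meant for symmetric matrices; eigenvalues come back in ascending order
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)
    order = np.argsort(eigenvalues)[::-1]  # largest variance first
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    components = eigenvectors[:, :n_components]  # columns are principal directions
    explained_ratio = eigenvalues[:n_components] / eigenvalues.sum()
    return X_centered @ components, components, explained_ratio

# Synthetic data (made up): the second feature is roughly twice the first,
# so most of the variance lies along a single direction
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
X = np.column_stack([
    x1,
    2.0 * x1 + rng.normal(scale=0.1, size=200),
    rng.normal(scale=0.1, size=200),
])

X_projected, components, explained_ratio = pca(X, n_components=2)
print(explained_ratio)  # share of total variance captured by each component
```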

PCA can be used for various purposes, such as data compression, noise reduction, and feature extraction. In particular, PCA can be used for feature extraction by projecting the original data onto a lower-dimensional subspace spanned by the first few principal components. This can help reduce the computational complexity of machine learning algorithms, improve model performance, and make the data easier to visualize.

PCA has several advantages, such as:

- It reduces the number of dimensions of the data, making it easier to visualize and work with.

- It can help remove noise and redundant information from the data.

- It can help identify important patterns and relationships in the data.

However, PCA also has some limitations, such as:

- It is sensitive to the scale of the features, so the data usually needs to be standardized first.

- It captures only linear relationships between features, so nonlinear structure in the data may be lost.

- It can be difficult to interpret the results and explain the underlying patterns in the data.

Confusion Matrix in Machine Learning

A confusion matrix is a table that is often used to evaluate the performance of a classification algorithm. It is also known as an error matrix. For binary classification, the table is made up of four values: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). A common convention is for the rows of the table to represent the actual values and the columns to represent the predicted values.

Here's what each value in the confusion matrix represents:

- True positives (TP): The number of positive samples that were correctly classified as positive.

- False positives (FP): The number of negative samples that were incorrectly classified as positive.

- True negatives (TN): The number of negative samples that were correctly classified as negative.

- False negatives (FN): The number of positive samples that were incorrectly classified as negative.

The confusion matrix can be used to calculate several performance metrics for a machine learning algorithm, such as accuracy, precision, recall, and F1 score. These metrics provide a measure of how well the algorithm is performing and can help identify areas for improvement.

Here's how the metrics are calculated:

Accuracy: The proportion of true results (both true positives and true negatives) among the total number of cases examined. It is calculated as (TP+TN)/(TP+TN+FP+FN).

Precision: The proportion of true positives among the cases that were classified as positive. It is calculated as TP/(TP+FP).

Recall: The proportion of true positives among the actual positive cases. It is calculated as TP/(TP+FN).

F1 score: The harmonic mean of precision and recall. It is calculated as 2*(precision*recall)/(precision+recall).
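As a quick check of the formulas above, the following sketch counts the four cells and computes the metrics in plain Python. The function names and the ten example labels are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN for a binary classification problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, fp, tn, fn

def classification_metrics(tp, fp, tn, fn):
    """Apply the accuracy, precision, recall, and F1 formulas."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Guard against division by zero when a class is never predicted or present
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up labels: 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 3 1 5 1
metrics = classification_metrics(tp, fp, tn, fn)
print(metrics)  # accuracy 0.8; precision, recall, and F1 all 0.75
```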

Regularization in Machine Learning

Regularization is a technique used in machine learning to prevent overfitting of the model to the training data. Overfitting occurs when the model fits the training data too closely, and as a result, performs poorly on new, unseen data. Regularization methods add a penalty term to the loss function, which helps to control the complexity of the model and prevent overfitting.

There are several types of regularization techniques in machine learning, including:

L1 regularization (Lasso regularization):

This method adds a penalty term proportional to the absolute value of the coefficients. This has the effect of shrinking some coefficients to zero, effectively performing feature selection.

L2 regularization (Ridge regularization):

This method adds a penalty term proportional to the square of the coefficients. This has the effect of shrinking the coefficients towards zero, but not to zero.

Elastic Net regularization:

This method is a combination of L1 and L2 regularization. It adds both penalties to the loss function.

The choice of regularization technique depends on the specific problem and data set. Regularization helps to improve the generalization performance of the model, by reducing the risk of overfitting, and can result in better performance on new, unseen data.
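The different effects of the L1 and L2 penalties on the coefficients can be observed with scikit-learn's Lasso, Ridge, and ElasticNet estimators. In this sketch the data is synthetic and the penalty strength alpha=0.1 is an arbitrary illustrative value.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso, Ridge

# Synthetic data (made up): only the first 2 of 10 features affect the target
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.5, size=200)

# alpha sets the penalty strength in all three models
lasso = Lasso(alpha=0.1).fit(X, y)
ridge = Ridge(alpha=0.1).fit(X, y)
elastic = ElasticNet(alpha=0.1, l1_ratio=0.5).fit(X, y)  # half L1, half L2

# L1 tends to set irrelevant coefficients exactly to zero; L2 only shrinks them
lasso_zeros = int(np.sum(lasso.coef_ == 0))
ridge_zeros = int(np.sum(ridge.coef_ == 0))
elastic_zeros = int(np.sum(elastic.coef_ == 0))
print("Lasso zero coefficients:      ", lasso_zeros)
print("Ridge zero coefficients:      ", ridge_zeros)
print("Elastic Net zero coefficients:", elastic_zeros)
```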

Neural Networks Machine Learning

Neural networks are a class of machine learning algorithms that are modeled after the structure and function of the human brain. They are composed of layers of interconnected nodes or "neurons" that process information and make predictions.

Neural networks are used for a wide range of applications, including image recognition, natural language processing, and predictive analytics. They are particularly well-suited for problems that involve complex, nonlinear relationships between inputs and outputs.

There are several types of neural networks, including:

Feedforward neural networks:

These are the simplest type of neural network, with information flowing in only one direction, from input to output. They are often used for classification problems.

Recurrent neural networks:

These networks have connections that form feedback loops, which lets them carry information from one step to the next. This makes them suited to sequential data such as time series or natural language text.

Convolutional neural networks:

These networks are designed to process images and are used for image recognition tasks.

Generative adversarial networks:

These networks are used to generate new data that is similar to a given dataset.

Training a neural network involves adjusting the weights and biases of the neurons to minimize the error between the predicted output and the actual output. This is typically done using an algorithm called backpropagation, which calculates the gradient of the loss function with respect to the weights and biases.
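The sketch below shows one possible version of this training loop in NumPy: a forward pass, a backward pass that applies the chain rule layer by layer, and a gradient-descent update. The XOR task, the hidden-layer size, the learning rate, and the number of epochs are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# XOR: a classic problem that a single linear layer cannot solve
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# One hidden layer with 8 units (an arbitrary size chosen for the example)
W1 = rng.normal(scale=1.0, size=(2, 8))
b1 = np.zeros((1, 8))
W2 = rng.normal(scale=1.0, size=(8, 1))
b2 = np.zeros((1, 1))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

learning_rate = 0.5
loss_history = []
for epoch in range(5000):
    # Forward pass
    hidden = np.tanh(X @ W1 + b1)
    output = sigmoid(hidden @ W2 + b2)
    # Binary cross-entropy; the small constant avoids log(0)
    loss = -np.mean(y * np.log(output + 1e-12) + (1 - y) * np.log(1 - output + 1e-12))
    loss_history.append(loss)

    # Backward pass (backpropagation)
    d_output = (output - y) / len(X)  # gradient w.r.t. the pre-sigmoid output
    grad_W2 = hidden.T @ d_output
    grad_b2 = d_output.sum(axis=0, keepdims=True)
    d_hidden = (d_output @ W2.T) * (1 - hidden ** 2)  # tanh'(z) = 1 - tanh(z)^2
    grad_W1 = X.T @ d_hidden
    grad_b1 = d_hidden.sum(axis=0, keepdims=True)

    # Gradient-descent update of weights and biases
    W2 -= learning_rate * grad_W2
    b2 -= learning_rate * grad_b2
    W1 -= learning_rate * grad_W1
    b1 -= learning_rate * grad_b1

print(f"Loss at start: {loss_history[0]:.3f}, at end: {loss_history[-1]:.3f}")
print(output.round(2).ravel())  # predicted probabilities for the four inputs
```

Frameworks such as TensorFlow and PyTorch compute these gradients automatically, so the backward pass rarely has to be written by hand.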

Neural networks have achieved state-of-the-art performance in many areas of machine learning. Networks with many layers form the basis of deep learning, and neural networks are often combined with other techniques such as reinforcement learning. However, training neural networks can be computationally expensive, and proper tuning of hyperparameters is often necessary to achieve good performance.

Python Machine Learning

Python is a popular programming language for machine learning due to its ease of use, large community, and availability of powerful libraries such as NumPy, Pandas, Scikit-Learn, TensorFlow, and Keras.

In Python, you can use a variety of machine learning algorithms, including:

Regression algorithms:

These are used to predict a continuous value, such as the price of a house or the age of a person.

Classification algorithms:

These are used to predict a categorical value, such as whether an email is spam or not.

Clustering algorithms:

These are used to group similar data points together based on their features.

Dimensionality reduction algorithms:

These are used to reduce the number of features in a dataset while retaining as much information as possible.

To perform machine learning in Python, you typically follow these steps:

- Import the necessary libraries and datasets.

- Preprocess the data, such as handling missing values, scaling the features, and encoding categorical variables.

- Split the data into training and testing sets.

- Train the model on the training data.

- Fine-tune the hyperparameters of the model using techniques such as cross-validation on the training data.

- Evaluate the final model on the testing data, and then use it to make predictions on new data.

- Deploy the model in a production environment.
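The steps above, up to but not including deployment, can be sketched with scikit-learn as follows. The Wine dataset, the choice of logistic regression, and the grid of C values are assumptions made for the example.

```python
from sklearn.datasets import load_wine
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a dataset (Wine is bundled with scikit-learn)
X, y = load_wine(return_X_y=True)

# Split first, so that scaling statistics come from the training data only
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Chain preprocessing and the model into a single estimator
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Train while tuning the regularization strength C with 5-fold cross-validation
param_grid = {"logisticregression__C": [0.01, 0.1, 1, 10]}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

# Evaluate the tuned model once on the held-out test set
test_accuracy = accuracy_score(y_test, search.predict(X_test))
print("Best C:", search.best_params_["logisticregression__C"])
print(f"Test accuracy: {test_accuracy:.2f}")
```

Keeping the scaler inside the pipeline means it is refit on each cross-validation fold, which avoids leaking information from the validation part into training.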

Python provides a user-friendly and flexible environment for implementing machine learning algorithms and models. With its vast array of libraries and tools, it has become one of the most widely used languages for machine learning.

Deep Learning vs Machine Learning

Deep learning is a subset of machine learning that involves building and training neural networks with multiple layers. While both deep learning and machine learning are types of artificial intelligence, they differ in their approach and applications.

Machine learning algorithms are typically designed to learn from data, make predictions, and improve performance over time. These algorithms can be used for a variety of tasks, such as classification, regression, and clustering. The learning process involves feeding the algorithm with training data and using statistical techniques to identify patterns and relationships in the data.

Deep learning, on the other hand, is a type of machine learning that involves the use of neural networks with multiple layers. These layers process the data in a hierarchical manner, with each layer building on the previous one to learn more complex features and relationships. Deep learning is particularly useful for tasks such as image recognition, speech recognition, and natural language processing.

Feature Selection in Machine Learning

Feature selection is an important step in machine learning that involves selecting a subset of relevant features from a larger set of available features. The aim is to reduce the dimensionality of the data and improve the performance of the machine learning algorithm.

There are various techniques for feature selection in machine learning, including:

Filter methods:

These involve ranking the features based on statistical measures such as correlation, mutual information, or chi-square test, and selecting the top-ranked features. This approach is computationally efficient and can be applied before training the model.

Wrapper methods:

These involve evaluating the performance of the model on different subsets of features and selecting the subset that yields the best performance. This approach is more computationally expensive but can result in better performance.

Embedded methods:

These involve incorporating feature selection into the model training process itself. Examples include L1 (Lasso) regularization in linear models, which can shrink the coefficients of unhelpful features to exactly zero, and the feature importance scores produced by tree-based models.
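The three families can be compared side by side with scikit-learn. This is a sketch under stated assumptions: the data is synthetic, the choice of 5 features to keep is arbitrary, and an L1-penalised linear SVM is used as one possible embedded selector.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.svm import LinearSVC

# Synthetic data: 20 features, of which only 5 are informative
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, n_redundant=0, random_state=0
)

# Filter: rank features by ANOVA F-score, independently of any model
filter_selector = SelectKBest(score_func=f_classif, k=5).fit(X, y)

# Wrapper: recursive feature elimination refits the model repeatedly,
# dropping the weakest feature each time
wrapper_selector = RFE(
    LogisticRegression(max_iter=1000), n_features_to_select=5
).fit(X, y)

# Embedded: an L1-penalised model zeroes out weak features during training
l1_model = LinearSVC(penalty="l1", dual=False, C=0.05, max_iter=5000)
embedded_selector = SelectFromModel(l1_model).fit(X, y)

selectors = {
    "filter": filter_selector,
    "wrapper": wrapper_selector,
    "embedded": embedded_selector,
}
for name, selector in selectors.items():
    print(name, selector.get_support(indices=True))  # indices of kept features
```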

Some factors to consider when selecting features include:

Relevance:

The selected features should be relevant to the task at hand and have a strong relationship with the target variable.

Redundancy:

The selected features should not be redundant or highly correlated with each other.

Interpretability:

The selected features should be easy to interpret and provide insights into the underlying data.

Python libraries for Machine Learning

There are several Python libraries available for machine learning. Here are some of the most commonly used libraries:

NumPy:

NumPy is a library for numerical computing in Python. It provides support for large, multi-dimensional arrays and matrices, as well as a variety of mathematical functions.

Pandas:

Pandas is a library for data manipulation and analysis. It provides support for data frames and tables, as well as functions for cleaning, transforming, and merging data.

Scikit-learn:

Scikit-learn is a machine learning library that provides a range of tools for data mining and analysis. It includes support for various machine learning algorithms, including classification, regression, clustering, and dimensionality reduction.

TensorFlow:

TensorFlow is a library for numerical computation and machine learning. It is designed to support the creation and training of deep learning models, including neural networks.

Keras:

Keras is a high-level neural network library. It is bundled with TensorFlow as tf.keras, and recent versions (Keras 3) can also run on other backends such as JAX and PyTorch. It provides a simple and intuitive interface for building and training deep learning models.

PyTorch:

PyTorch is a machine learning library that provides support for deep learning models, including neural networks. It includes support for automatic differentiation and dynamic computation graphs.

Matplotlib:

Matplotlib is a plotting library for Python. It provides support for creating a wide range of visualizations, including line plots, scatter plots, and histograms.

Ensemble Learning in Machine Learning

Ensemble learning is a technique in machine learning that combines multiple individual models to improve the overall performance of the model. The basic idea behind ensemble learning is to create a group of models that can work together to produce more accurate predictions than any single model on its own.

There are several popular techniques for ensemble learning in machine learning, including:

Bagging:

This technique involves training multiple instances of the same model on different subsets of the training data, and then combining their predictions through averaging or voting.

Boosting:

This technique involves training multiple weak models in sequence, with each model focused on correcting the errors made by the previous model.

Stacking:

This technique involves training multiple different models on the same data, and then using a meta-model to combine their predictions.
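All three techniques are available in scikit-learn, and the sketch below compares them with a single decision tree using 5-fold cross-validation. The dataset, the base models, and the ensemble sizes are illustrative assumptions, and the ranking of the methods will vary from dataset to dataset.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "single tree": DecisionTreeClassifier(random_state=0),
    # Bagging: the same model trained on different bootstrap samples
    "bagging": BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0),
    # Boosting: weak models (decision stumps by default) trained in sequence
    "boosting": AdaBoostClassifier(n_estimators=50, random_state=0),
    # Stacking: different models combined by a meta-model
    "stacking": StackingClassifier(
        estimators=[
            ("tree", DecisionTreeClassifier(random_state=0)),
            ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier())),
        ],
        final_estimator=LogisticRegression(max_iter=1000),
    ),
}

scores = {
    name: cross_val_score(model, X, y, cv=5).mean() for name, model in models.items()
}
for name, score in scores.items():
    print(f"{name}: {score:.3f}")
```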

Ensemble learning can be a powerful tool for improving the accuracy and robustness of machine learning models, particularly in cases where individual models may be prone to overfitting or other forms of bias. However, it also requires careful consideration of issues like model diversity, bias-variance trade-offs, and scalability, as well as a deep understanding of the underlying models and data.

Data Science vs Machine Learning

Data science and machine learning are two closely related fields, but they are not the same thing.

Data science is a broad field that encompasses a range of techniques for extracting insights and knowledge from data. This includes techniques for data cleaning, data visualization, statistical analysis, and machine learning. Data science is concerned with using data to answer questions, identify patterns, and make predictions about the future.

Machine learning, on the other hand, is a subset of data science that focuses specifically on developing algorithms that can learn from data and make predictions or decisions without being explicitly programmed. Machine learning algorithms can be supervised (where the model is trained on labeled data), unsupervised (where the model learns patterns in unlabeled data), or semi-supervised (where the model is trained on a combination of labeled and unlabeled data).

In other words, data science is a broader field that includes machine learning, while machine learning is a specific subset of data science that focuses on building predictive models.

Data scientists often use machine learning as a tool to solve problems and gain insights from data, but they may also use other techniques such as statistical analysis, data visualization, and data mining. Machine learning engineers, on the other hand, focus specifically on building and optimizing machine learning models.

Overall, both data science and machine learning are important fields that play a key role in helping organizations make data-driven decisions and gain insights from their data.

UCI Machine Learning Repository

The UCI Machine Learning Repository is a collection of databases, domain theories, and data generators that are used by researchers in the field of machine learning for the empirical analysis of algorithms and the development of predictive models.

The repository was created as an FTP archive in 1987 by David Aha and fellow graduate students at the University of California, Irvine (UCI), where it is hosted by the Center for Machine Learning and Intelligent Systems. It contains a large collection of datasets that cover a wide range of topics such as medicine, social sciences, engineering, and physical sciences. These datasets are available for free and can be used by researchers and students for their own research and education purposes.

The UCI Machine Learning Repository also provides a platform for researchers to share their own datasets with the machine learning community, which can help promote the development and evaluation of new machine learning algorithms and techniques.

Some of the popular datasets available on the UCI Machine Learning Repository include the Iris dataset (used for classification), the Wine dataset (used for classification), and the Boston Housing dataset (used for regression). The repository also contains datasets for clustering, image recognition, and text classification, among other topics.

Overall, the UCI Machine Learning Repository is a valuable resource for researchers and students in the field of machine learning, providing a wide range of datasets that can be used to develop and evaluate new machine learning algorithms and techniques.

Overfitting and Underfitting in Machine Learning

Overfitting and underfitting are two common problems in machine learning where a model is not able to generalize well to new, unseen data.

Overfitting occurs when a model is too complex and is able to fit the training data very closely, but performs poorly on new, unseen data. This is because the model has learned the noise and the idiosyncrasies of the training data rather than the underlying patterns that generalize to new data. Overfitting can be mitigated by using techniques like regularization, early stopping, and dropout, which can simplify the model and prevent it from overfitting.

Underfitting occurs when a model is too simple and is not able to capture the underlying patterns in the data, resulting in poor performance on both the training and test data. This can happen when the model is not complex enough for the problem, when it is regularized too heavily, or when the available features do not carry enough information about the target. Underfitting can be addressed by increasing the complexity of the model, adding more informative features, or reducing the amount of regularization. Collecting more data, by contrast, mainly helps with overfitting.

To strike a balance between overfitting and underfitting, it's important to monitor the performance of the model on both the training data and a separate validation set, and adjust the model accordingly. This can be done by using techniques like cross-validation, which can help identify the level of model complexity that generalizes well to new data. The test set should be kept aside for a final check of the chosen model.
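A small experiment makes the two failure modes visible. The sketch below fits polynomials of increasing degree to noisy samples of a sine curve and compares training and validation error; the target function, noise level, sample sizes, and degrees are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_function(x):
    return np.sin(2 * np.pi * x)

# Synthetic data (made up): a small training set and a larger validation set
x_train = rng.uniform(0, 1, size=20)
y_train = true_function(x_train) + rng.normal(scale=0.2, size=20)
x_val = rng.uniform(0, 1, size=200)
y_val = true_function(x_val) + rng.normal(scale=0.2, size=200)

def mse(coefficients, x, y):
    """Mean squared error of a fitted polynomial on the given points."""
    return float(np.mean((np.polyval(coefficients, x) - y) ** 2))

results = {}
for degree in (1, 4, 12):
    coefficients = np.polyfit(x_train, y_train, deg=degree)
    train_error = mse(coefficients, x_train, y_train)
    val_error = mse(coefficients, x_val, y_val)
    results[degree] = (train_error, val_error)
    print(f"degree {degree:2d}: train MSE {train_error:.3f}, validation MSE {val_error:.3f}")
```

A straight line (degree 1) is too simple and has high error on both sets. The degree-12 polynomial drives the training error down, but its validation error is typically much worse than its training error, which is the signature of overfitting.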

Boosting in Machine Learning

Boosting is a machine learning technique that combines multiple weak models to create a stronger and more accurate model. It is a type of ensemble learning method, where multiple models are trained on the same dataset and their outputs are combined to make a final prediction.

The basic idea of boosting is to sequentially train a series of weak models, each of which is focused on correcting the errors made by the previous model. The final prediction is then made by combining the predictions of all the weak models. The process of training these weak models is done by assigning weights to the training samples, with more emphasis placed on the samples that were misclassified by the previous models.

The most popular algorithm for boosting is AdaBoost (Adaptive Boosting), which was introduced by Freund and Schapire in 1997. AdaBoost works by training a series of weak classifiers on the training data, with each classifier focused on correcting the errors made by the previous classifiers. The final prediction is made by combining the predictions of all the weak classifiers, with more weight given to the classifiers that perform better on the training data.
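The reweighting idea can be sketched from scratch with NumPy, using decision stumps (single-threshold rules) as the weak classifiers. This is a simplified illustration of the discrete AdaBoost scheme for labels -1 and +1; the helper names and the synthetic data are assumptions, and the exhaustive stump search is written for clarity rather than speed.

```python
import numpy as np

def stump_predict(stump, X):
    """Predict -1 or +1 by thresholding a single feature."""
    column = X[:, stump["feature"]]
    return np.where(stump["polarity"] * column < stump["polarity"] * stump["threshold"], -1, 1)

def fit_stump(X, y, sample_weights):
    """Find the single-feature threshold rule with the lowest weighted error."""
    best = {"error": np.inf}
    for feature in range(X.shape[1]):
        for threshold in np.unique(X[:, feature]):
            for polarity in (1, -1):
                candidate = {"feature": feature, "threshold": threshold, "polarity": polarity}
                error = sample_weights[stump_predict(candidate, X) != y].sum()
                if error < best["error"]:
                    best = {**candidate, "error": error}
    return best

def adaboost_fit(X, y, n_rounds=50):
    """Train AdaBoost with decision stumps; labels must be -1 or +1."""
    weights = np.full(len(X), 1.0 / len(X))  # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, weights)
        error = np.clip(stump["error"], 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - error) / error)  # more accurate stumps get a larger vote
        # Increase the weight of misclassified samples, decrease the rest
        weights = weights * np.exp(-alpha * y * stump_predict(stump, X))
        weights = weights / weights.sum()
        ensemble.append((alpha, stump))
    return ensemble

def adaboost_predict(ensemble, X):
    """Weighted vote of all stumps."""
    votes = sum(alpha * stump_predict(stump, X) for alpha, stump in ensemble)
    return np.where(votes >= 0, 1, -1)

# Synthetic data (made up): a diagonal boundary that no single stump can match
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(200, 2))
y = np.where(X[:, 0] + X[:, 1] > 1.0, 1, -1)

ensemble = adaboost_fit(X, y)
stump_accuracy = float(np.mean(stump_predict(ensemble[0][1], X) == y))
ensemble_accuracy = float(np.mean(adaboost_predict(ensemble, X) == y))
print(f"First stump alone: {stump_accuracy:.2f}")
print(f"Boosted ensemble:  {ensemble_accuracy:.2f}")
```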

Other popular boosting algorithms include Gradient Boosting, XGBoost, and LightGBM, which have been shown to achieve state-of-the-art performance on many machine learning tasks. Boosting is a powerful technique that can be used for both regression and classification problems, and it is widely used in industry and academia for a variety of applications.

Machine Learning for Kids

Machine learning can be a complex and technical field, but there are resources available to teach kids the basics of machine learning in a fun and engaging way. Here are some ways kids can learn about machine learning:

Scratch:

Scratch is a block-based visual programming language developed by MIT, which provides a simple and interactive platform for kids to learn about programming. Scratch does not include machine learning tools out of the box, but extensions and third-party projects built on top of it let kids train simple models and use them in their own games and animations.

Code.org:

Code.org provides a range of resources for teaching kids about computer science and programming, including a section on machine learning. It includes free, interactive activities and lessons designed to introduce kids to the basic concepts of machine learning, such as data collection and analysis, decision-making, and prediction.

Machine learning kits:

There are a variety of machine learning kits available on the market, designed specifically for kids. These kits include hands-on activities that teach kids about the basics of machine learning, such as building a robot that can navigate through a maze or using sensors to collect and analyze data.

Online courses:

There are also a variety of online courses and tutorials available that are designed to teach kids about machine learning. These courses typically include interactive videos, quizzes, and hands-on activities to help kids learn the fundamentals of machine learning.

Principal Component Analysis in Machine Learning

Principal Component Analysis (PCA) is a popular unsupervised machine learning technique used for dimensionality reduction. In other words, PCA is used to reduce the number of features or variables in a dataset while still retaining the most important information.

PCA works by identifying the underlying correlations between features in a dataset and transforming them into a new set of uncorrelated variables, called principal components. These principal components are ranked in order of the amount of variance they explain in the original data. The first principal component explains the most variance, followed by the second, and so on.

PCA is commonly used for data preprocessing, as it can help reduce the dimensionality of the data, making it easier to analyze and visualize. It can also be used for feature extraction, as the principal components can often reveal hidden relationships between features that can be useful for building predictive models.

In Python, the scikit-learn library provides an easy-to-use implementation of PCA. Here's an example of how to use PCA in scikit-learn:

python

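import numpy as np
from sklearn.decomposition import PCA

# Placeholder data for illustration: 100 samples with 5 features
X = np.random.rand(100, 5)

# Create a PCA object that keeps the first two principal components
pca = PCA(n_components=2)

# Fit the PCA model to the data
pca.fit(X)

# Transform the data into the new feature space
X_pca = pca.transform(X)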

In this example, we create a PCA object and set the number of components we want to reduce the data to (in this case, 2). We then fit the PCA model to the data and transform it into the new feature space. The resulting X_pca array contains the transformed data with the reduced number of dimensions.

Bias and Variance in Machine Learning

Bias and variance are two important concepts in machine learning that relate to the accuracy and generalization ability of a predictive model.

Bias refers to the error introduced by the simplifying assumptions a model makes. More precisely, it is the difference between the model's average prediction (averaged over many possible training sets) and the true value. A high bias indicates that the model is unable to capture the true patterns in the data and is underfitting. This typically happens when the model is too simple for the problem.

Variance, on the other hand, refers to how much the model's predictions change when it is trained on different samples of training data. A high variance indicates that the model is fitting the noise in its particular training set, that is, overfitting. This can happen when the model is too flexible or there is not enough data to train the model.

Ideally, we want to find a balance between bias and variance that minimizes the total error of the model. This is often referred to as the bias-variance tradeoff.

To diagnose bias and variance issues in a model, we can use techniques like cross-validation and learning curves. Cross-validation can help us determine whether a model is overfitting or underfitting, while learning curves can help us visualize the bias-variance tradeoff.

In general, to reduce bias, we can try increasing the complexity of the model or adding more features to the data. To reduce variance, we can try reducing the complexity of the model or adding more data to the training set. Regularization techniques like L1 and L2 regularization can also be used to balance bias and variance.
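Bias and variance can be estimated empirically by training the same kind of model on many independently drawn training sets and looking at how its predictions behave at fixed test points. The sketch below does this for a simple and a flexible polynomial model; the target function, the noise level, and the sample sizes are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_function(x):
    return np.sin(2 * np.pi * x)

x_test = np.linspace(0.05, 0.95, 50)  # fixed points at which predictions are compared

def bias_variance(degree, n_datasets=200, n_samples=30, noise=0.3):
    """Estimate squared bias and variance of a polynomial model of given degree."""
    predictions = np.empty((n_datasets, len(x_test)))
    for i in range(n_datasets):
        # Draw a fresh training set each time
        x = rng.uniform(0, 1, n_samples)
        y = true_function(x) + rng.normal(scale=noise, size=n_samples)
        predictions[i] = np.polyval(np.polyfit(x, y, degree), x_test)
    mean_prediction = predictions.mean(axis=0)
    # Bias: how far the average prediction is from the truth
    bias_squared = float(np.mean((mean_prediction - true_function(x_test)) ** 2))
    # Variance: how much predictions scatter across training sets
    variance = float(np.mean(predictions.var(axis=0)))
    return bias_squared, variance

simple_bias, simple_variance = bias_variance(degree=1)
flexible_bias, flexible_variance = bias_variance(degree=9)
print(f"degree 1: bias^2 {simple_bias:.3f}, variance {simple_variance:.3f}")
print(f"degree 9: bias^2 {flexible_bias:.3f}, variance {flexible_variance:.3f}")
```

The simple model shows the higher bias and the flexible model the higher variance, which is the tradeoff described above.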

Perceptron in Machine Learning

The perceptron is a type of linear binary classifier in machine learning. It was invented in the 1950s by Frank Rosenblatt, and is considered to be one of the earliest models of artificial neural networks.

At its core, the perceptron is a single-layer neural network that takes in multiple inputs and produces a single binary output. The inputs are weighted, and the sum of the weighted inputs is passed through an activation function, which produces the output.

The weights are adjusted during training using the perceptron learning rule, which can be viewed as a form of stochastic gradient descent: each time a training example is misclassified, the weights are nudged in the direction that reduces the error between the predicted output and the true output.

The perceptron algorithm is designed to learn from labeled data and is used for binary classification. It is a simple and fast algorithm that can be trained on large datasets, but it has some limitations. For example, it can only separate classes with a linear decision boundary, and training is only guaranteed to converge when the data is linearly separable, which means that it cannot handle non-linear relationships such as the XOR function.
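The description above can be sketched in a few lines of plain Python. This is an illustrative implementation of the classic perceptron update rule with a step activation. The function names, the learning rate, and the epoch limit are assumptions chosen for the example, not part of any library.

```python
def predict(weights, bias, inputs):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Adjust the weights only when a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample is classified correctly
            break
    return weights, bias

# AND is linearly separable; XOR is not.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
xor_gate = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(and_gate)
and_predictions = [predict(and_weights, and_bias, x) for x, _ in and_gate]

xor_weights, xor_bias = train_perceptron(xor_gate)
xor_predictions = [predict(xor_weights, xor_bias, x) for x, _ in xor_gate]

print("AND:", and_predictions)
print("XOR:", xor_predictions)
```

The AND gate is learned correctly, while the XOR gate is never fully learned no matter how many epochs are allowed, because no single straight line separates its two classes.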

Despite its limitations, the perceptron has served as a building block for more advanced neural network architectures. Multilayer networks built from perceptron-like units have been used in many applications, such as image recognition, speech recognition, and natural language processing.

Dimensionality Reduction in Machine Learning

Dimensionality reduction is the process of reducing the number of features in a dataset while retaining as much information as possible. This is often done in machine learning to improve the performance of models, reduce computational complexity, and eliminate noise and redundancy.

One common technique for dimensionality reduction is principal component analysis (PCA). PCA works by finding the principal components of a dataset, which are the directions in which the data varies the most. These principal components are used to create a new set of features that capture the most important information in the original data.
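To make "the direction in which the data varies the most" concrete, here is a minimal sketch that finds the first principal component of a tiny 2-D dataset using power iteration on the covariance matrix. It is only an illustration under stated assumptions: the data points and the function name first_principal_component are made up, and production libraries such as scikit-learn use more robust decompositions.

```python
import math

def first_principal_component(points, iterations=100):
    """Return the feature means and the unit vector of greatest variance."""
    n = len(points)
    dim = len(points[0])
    means = [sum(p[j] for p in points) / n for j in range(dim)]
    centered = [[p[j] - means[j] for j in range(dim)] for p in points]

    # Sample covariance matrix of the centered data.
    cov = [[sum(row[a] * row[b] for row in centered) / (n - 1)
            for b in range(dim)] for a in range(dim)]

    # Power iteration: repeatedly multiply by the covariance matrix and
    # normalise; the vector converges to the dominant eigenvector.
    v = [1.0] * dim
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(dim)) for a in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v

# Assumed toy data lying roughly along the line y = 2x.
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
means, pc1 = first_principal_component(points)

# Project each 2-D point onto the component to get a 1-D representation.
projected = [sum((p[j] - means[j]) * pc1[j] for j in range(2)) for p in points]
print("first component:", pc1)
print("1-D projection:", projected)
```

Because the points lie close to a line with slope 2, the component found points along that line, and the single projected coordinate preserves almost all of the variation in the original two features.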

Another technique for dimensionality reduction is linear discriminant analysis (LDA). LDA is a supervised technique that maximizes the separation between classes in the data while reducing the dimensionality. It works by finding the linear combination of features that best separate the classes.

Other techniques for dimensionality reduction include t-distributed stochastic neighbor embedding (t-SNE), which is often used for visualizing high-dimensional data, and autoencoders, which are neural networks that can be trained to reconstruct high-dimensional data using a lower-dimensional representation.

Dimensionality reduction is a powerful technique for reducing the complexity of datasets and improving the performance of machine learning models. However, it should be used with caution, as it can also lead to information loss and decreased interpretability of the data.

Genetic Algorithm in Machine Learning

A genetic algorithm is a type of optimization algorithm inspired by the process of natural selection and genetic inheritance in biological systems. In machine learning, genetic algorithms are used to search for the best possible solution or set of solutions to a given problem.

In a genetic algorithm, a population of potential solutions is randomly generated and then evaluated based on a fitness function that measures how well each solution solves the problem. The solutions are then evolved through a process of selection, crossover, and mutation, similar to how biological organisms pass on their genetic material.

During the selection process, the solutions with the highest fitness values are selected to reproduce and create the next generation of solutions. The crossover process involves taking two parent solutions and combining them to create new offspring solutions that inherit some of the characteristics of each parent. The mutation process involves introducing random changes to some of the offspring solutions to introduce diversity and prevent the algorithm from getting stuck in local optima.
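The selection, crossover, and mutation steps can be sketched with the standard library alone. The example below is a hedged illustration, not the implementation used by DEAP or PyGAD: it solves the toy "OneMax" problem (maximise the number of 1s in a bit string), and the tournament size, mutation rate, population size, and function names are all assumptions chosen for the demonstration.

```python
import random

def fitness(bits):
    """OneMax fitness: the number of 1s in the bit string."""
    return sum(bits)

def select(population, k=3):
    """Tournament selection: the fittest of k randomly chosen individuals."""
    return max(random.sample(population, k), key=fitness)

def crossover(parent_a, parent_b):
    """Single-point crossover: head of one parent, tail of the other."""
    point = random.randint(1, len(parent_a) - 1)
    return parent_a[:point] + parent_b[point:]

def mutate(bits, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [1 - bit if random.random() < rate else bit for bit in bits]

def run_ga(n_bits=20, pop_size=30, generations=40, seed=0):
    random.seed(seed)
    population = [[random.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        best = max(population, key=fitness)
        history.append(fitness(best))
        next_generation = [best]  # elitism: keep the best solution unchanged
        while len(next_generation) < pop_size:
            child = crossover(select(population), select(population))
            next_generation.append(mutate(child))
        population = next_generation
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history

best, history = run_ga()
print("best solution:", best)
print("best fitness per generation:", history)
```

Carrying the best individual over unchanged (elitism) is a design choice made here so that the best fitness never decreases from one generation to the next. Without it, crossover and mutation can occasionally destroy the best solution found so far.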

Genetic algorithms can be applied to a wide range of problems, including optimization, classification, and regression. They are particularly useful when the search space is large and complex, and when it is difficult to find the global optimum using traditional optimization techniques.

There are several libraries available in Python for implementing genetic algorithms, including the DEAP (Distributed Evolutionary Algorithms in Python) library and the PyGAD (Python Genetic Algorithm) library.

Machine Learning Projects for Final Year

There are many interesting and challenging machine learning projects that can be taken up for final year projects. Here are a few project ideas:

Sentiment analysis of customer reviews:

Develop a model that can analyze the sentiment of customer reviews for a product or service. This can be used by businesses to identify areas of improvement or to track customer satisfaction over time.

Fraud detection:

Build a model that can detect fraudulent transactions based on historical data. This can be used by financial institutions or e-commerce businesses to prevent fraudulent activity.

Image recognition:

Develop a model that can identify objects or people in images. This can be used in a variety of applications, such as surveillance systems or autonomous vehicles.

Predicting stock prices:

Build a model that can predict stock prices based on historical data. This can be useful for investors or financial analysts.

Customer segmentation:

Develop a model that can segment customers based on their behavior or demographics. This can be used by businesses to personalize marketing campaigns or to identify high-value customers.

Recommendation systems:

Build a model that can recommend products or services to users based on their behavior or preferences. This can be used by e-commerce businesses or streaming services.

Speech recognition:

Develop a model that can transcribe speech into text. This can be used in applications such as voice assistants or transcription services.

Predictive maintenance:

Build a model that can predict when equipment is likely to fail based on sensor data. This can be used in a variety of industries, such as manufacturing or transportation.

Azure Machine Learning

Azure Machine Learning is a cloud-based machine learning platform that enables developers and data scientists to build, deploy, and manage machine learning models at scale. It provides a variety of tools and services to create, train, and deploy machine learning models in the cloud or at the edge.

Azure Machine Learning includes features such as:

- Automated machine learning to automatically build and tune machine learning models.

- Model management to store and track model versions, dependencies, and performance.

- Data preparation and feature engineering to clean, transform, and preprocess data.

- Integration with popular machine learning frameworks, such as TensorFlow and PyTorch.

- Integration with Azure services, such as Azure Databricks, Azure Kubernetes Service, and Azure Stream Analytics.

- Deployment options for deploying models as RESTful web services or as containers to run on-premises, in the cloud, or at the edge.

Azure Machine Learning is used by organizations to build a variety of machine learning models for applications such as fraud detection, predictive maintenance, image classification, and natural language processing.

AWS Machine Learning

AWS (Amazon Web Services) provides several machine learning services that can be used to build intelligent applications. Some of the key AWS machine learning services are:

Amazon SageMaker -

A fully managed machine learning service that enables data scientists and developers to build, train, and deploy machine learning models at scale.

Amazon Rekognition -

A fully managed computer vision service that provides APIs to detect and analyze faces, objects, scenes, and activities in images and videos.

Amazon Comprehend -

A natural language processing (NLP) service that uses machine learning to uncover insights and relationships in text.

Amazon Polly -

A text-to-speech service that uses machine learning to turn text into lifelike speech.

Amazon Transcribe -

A speech recognition service that uses machine learning to convert speech to text.

Amazon Translate -

A language translation service that uses machine learning to provide high-quality, low-cost translation between languages.

AWS also provides several other services that can be used in conjunction with machine learning, such as Amazon S3 for storage, AWS Lambda for serverless computing, and AWS Glue for ETL (extract, transform, load) jobs.

Reddit Machine Learning

Reddit is a social media platform that has a large community dedicated to machine learning. The subreddit "r/MachineLearning" is a popular forum where people can discuss topics related to machine learning, such as news, research, applications, and tutorials. It's a great resource for people who want to learn more about machine learning, get feedback on their projects, or find job opportunities in the field. Additionally, there are several other subreddits related to machine learning and artificial intelligence, such as "r/learnmachinelearning" and "r/artificial".

NLP Machine Learning

Natural Language Processing (NLP) is a field of artificial intelligence that relies heavily on machine learning and focuses on the interaction between computers and human language. It involves developing algorithms and models that can understand, analyze, and generate natural language text or speech. Some common tasks in NLP include sentiment analysis, language translation, question answering, chatbot development, and text classification.

NLP techniques typically involve applying machine learning approaches such as supervised learning, unsupervised learning, and deep learning to textual data. Popular NLP libraries and frameworks in Python include NLTK, spaCy, Gensim, TensorFlow, and PyTorch. These tools provide pre-built models and algorithms for various NLP tasks, as well as APIs for training custom models using your own data.
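As a rough illustration of supervised learning applied to text, the sketch below trains a tiny bag-of-words Naive Bayes sentiment classifier using only the Python standard library. The four training sentences, the labels "pos" and "neg", and the function names are invented for this example. In practice one of the libraries mentioned above and far more data would be used.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()

def train_naive_bayes(examples):
    """Count how often each word appears under each label."""
    word_counts = defaultdict(Counter)  # label -> word frequencies
    label_counts = Counter()            # label -> number of examples
    vocabulary = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocabulary.add(word)
    return word_counts, label_counts, vocabulary

def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_examples)  # prior
        for word in tokenize(text):
            if word in vocabulary:  # ignore words never seen in training
                # Laplace (add-one) smoothing avoids zero probabilities.
                score += math.log((word_counts[label][word] + 1)
                                  / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training data for illustration only.
training_examples = [
    ("i love this product", "pos"),
    ("great quality and great price", "pos"),
    ("terrible service", "neg"),
    ("i hate this awful product", "neg"),
]
model = train_naive_bayes(training_examples)

print(classify("love the great price", model))
print(classify("awful terrible service", model))
```

The classifier treats each document as an unordered collection of words, which is a strong simplification, but it shows the basic pattern shared by most supervised NLP pipelines: turn text into features, learn from labeled examples, and score new text.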

NLP is a rapidly growing field with many applications across industries such as healthcare, finance, customer service, and marketing. With the increasing amount of textual data generated by social media, web pages, and other sources, NLP is becoming an essential tool for extracting insights and making informed decisions from large volumes of unstructured data.

Google Machine Learning

Google offers a wide range of machine learning products and services, including:

Google Cloud Machine Learning Engine:

A managed service that allows developers to build and deploy machine learning models at scale. It was later renamed AI Platform and has since been succeeded by Vertex AI.

TensorFlow:

An open-source machine learning library developed by Google, which is widely used in industry and academia.

Google Cloud AutoML:

A suite of tools that enable businesses with limited machine learning expertise to build custom machine learning models.

Google Cloud Vision API:

A pre-trained machine learning model that can detect and classify objects in images.

Google Cloud Natural Language API:

A pre-trained machine learning model that can analyze and classify text data.

Google Cloud Translation API:

A pre-trained machine learning model that can translate text between different languages.

Google Cloud Speech-to-Text API:

A pre-trained machine learning model that can transcribe speech into text.

These products and services can be used for a wide range of applications, including image and speech recognition, natural language processing, and predictive analytics.

Machine Learning Tools

There are many tools available for machine learning, both open source and commercial. Here are some popular ones:

Python libraries:

Python is a popular programming language for machine learning, and there are many libraries available, such as scikit-learn, TensorFlow, Keras, PyTorch, pandas, NumPy, and Matplotlib.

R language:

R is another popular language for machine learning, and it has many packages available, such as caret, e1071, and randomForest.

Weka:

Weka is a popular open-source data mining tool that provides a graphical user interface to perform machine learning tasks.

KNIME:

KNIME is a data analytics platform that allows users to build data pipelines, perform data analysis, and create machine learning models.

RapidMiner:

RapidMiner is a data science platform that provides an integrated environment for data preparation, machine learning, and predictive analytics.

Microsoft Azure Machine Learning Studio:

It is a cloud-based service that provides an integrated environment for building, deploying, and managing machine learning models.

Google Cloud Machine Learning Engine:

It is a cloud-based service that allows users to build, train, and deploy machine learning models on Google Cloud Platform.

Amazon SageMaker: